In this article we learn how Kubernetes Deployments work in the development process. Kubernetes uses labels to tag resources, and those labels tell a deployment which PODs belong to it.

Kubernetes mechanisms work to ensure the required resources are present in the cluster and reach the desired state. This eliminates the need to manually update and deploy applications, which is time-consuming and can lead to human error.
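The desired-state idea can be sketched as a small reconcile function. This is only a toy model in Python, not how the Kubernetes controllers are actually implemented; the function name `reconcile` and the action strings are made up for illustration.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the current state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few PODs: create the missing ones.
        return [f"create pod #{i + 1}" for i in range(diff)]
    if diff < 0:
        # Too many PODs: delete the surplus ones from the end of the list.
        return [f"delete {name}" for name in running_pods[diff:]]
    # Current state already matches the desired state.
    return []


print(reconcile(4, ["pod-a", "pod-b"]))
print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))
```

The point of the sketch is that we only declare the number we want; the loop works out what to create or delete.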

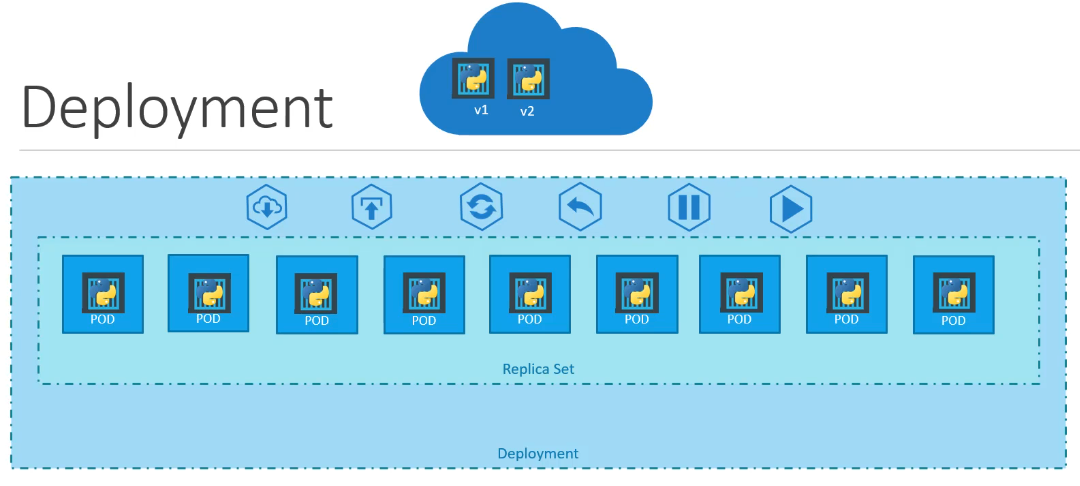

This time we deploy single-instance PODs from the application we have created, and we also deploy them using a replication controller or ReplicaSet.

A Kubernetes Deployment sits at the top of this hierarchy: it lets us manage PODs and ReplicaSets in one file.

Create a Kubernetes Deployment

To create a Kubernetes deployment file, the kind field must be set to

kind: Deployment

The deployment file is the same as the ReplicaSet file from before; the only difference is the kind field.

Create a YAML file named deployment-definition.yaml with the following contents:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-deployment
      labels:
        app: myapp
        type: front-end
    spec:
      template:
        metadata:
          name: myapp-pod
          labels:
            app: myapp
            type: front-end
        spec:
          containers:
            - name: nginx-container
              image: nginx
      replicas: 4
      selector:
        matchLabels:
          type: front-end
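In apps/v1 the selector has to match the labels in the POD template, otherwise the deployment is rejected. As a rough illustration, the same manifest can be written as a Python dictionary and checked before it is sent to the cluster. The helper `selector_matches_template` is a hypothetical name and only covers matchLabels, not matchExpressions.

```python
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {
        "name": "myapp-deployment",
        "labels": {"app": "myapp", "type": "front-end"},
    },
    "spec": {
        "replicas": 4,
        "selector": {"matchLabels": {"type": "front-end"}},
        "template": {
            "metadata": {
                "name": "myapp-pod",
                "labels": {"app": "myapp", "type": "front-end"},
            },
            "spec": {"containers": [{"name": "nginx-container", "image": "nginx"}]},
        },
    },
}


def selector_matches_template(manifest):
    """True if every matchLabels pair is also present in the POD template labels."""
    wanted = manifest["spec"]["selector"]["matchLabels"]
    labels = manifest["spec"]["template"]["metadata"].get("labels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


print(selector_matches_template(deployment))
```

The template may carry extra labels (here app: myapp); only the pairs named in the selector need to be present.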

When finished, run the command below to create the Kubernetes deployment.

➜ kubectl create -f code/deployments/deployment-definition.yaml

To check whether the deployment is running, use this command.

➜ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

myapp-deployment 4/4 4 4 9s

The output shows that the deployment is running well and 4 PODs are available.
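The READY column reads as ready/desired, so 4/4 means all four requested PODs are ready. A minimal sketch of reading one row of that table in Python follows; `parse_deployment_row` is a hypothetical helper, and it assumes the default column layout shown above.

```python
def parse_deployment_row(row):
    """Split one data row of `kubectl get deployments` into named fields."""
    name, ready, up_to_date, available, age = row.split()
    ready_now, desired = (int(part) for part in ready.split("/"))
    return {
        "name": name,
        "ready": ready_now,
        "desired": desired,
        "up_to_date": int(up_to_date),
        "available": int(available),
        "age": age,
    }


row = parse_deployment_row("myapp-deployment 4/4 4 4 9s")
print(row["ready"] == row["desired"])
```

For real scripts, asking kubectl for structured output with -o json is usually more robust than splitting text columns.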

Then we look at the ReplicaSet and its PODs with this command.

➜ kubectl get replicaset,pods

NAME DESIRED CURRENT READY AGE

replicaset.apps/myapp-replicaset 4 4 4 4d20h

NAME READY STATUS RESTARTS AGE

pod/myapp-replicaset-l568n 1/1 Running 0 4d20h

pod/myapp-replicaset-ppr52 1/1 Running 0 4d20h

pod/myapp-replicaset-rbhlp 1/1 Running 0 4d20h

pod/myapp-replicaset-rrqc2 1/1 Running 0 4d20h

Everything is running well: the Running status means that no POD has errors or is failing to start.

If we want more detailed information about the deployment, we can use the describe command.

➜ kubectl describe deployment myapp-deployment

Name: myapp-deployment

Namespace: default

CreationTimestamp: Mon, 11 Mar 2024 14:15:46 +0700

Labels: app=myapp

type=front-end

Annotations: deployment.kubernetes.io/revision: 1

Selector: type=front-end

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=myapp

type=front-end

Containers:

nginx-container:

Image: nginx

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: myapp-replicaset (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 4m57s deployment-controller Scaled down replica set myapp-replicaset to 4 from 8

If we want an overview of the PODs, Services, Deployments and ReplicaSets at once, we can use this command.

➜ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/myapp-replicaset-l568n 1/1 Running 0 4d20h

pod/myapp-replicaset-ppr52 1/1 Running 0 4d20h

pod/myapp-replicaset-rbhlp 1/1 Running 0 4d20h

pod/myapp-replicaset-rrqc2 1/1 Running 0 4d20h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/myapp-deployment 4/4 4 4 5m59s

NAME DESIRED CURRENT READY AGE

replicaset.apps/myapp-replicaset 4 4 4 4d20h

Update and Rollback Kubernetes Deployments

Suppose that one day, after we deploy our service, something undesirable happens to the latest version, or an error is found that keeps the service from running properly.

In that case we need a rollback mechanism to return our service to a stable, previous version.

Before rolling anything back, we can check the state of the current rollout with this command.

➜ kubectl rollout status deployment/myapp-deployment

deployment "myapp-deployment" successfully rolled out

The rollout has finished. We can see the revisions that have been rolled out so far by looking at the history.

➜ kubectl rollout history deployment/myapp-deployment

deployment.apps/myapp-deployment

REVISION CHANGE-CAUSE

1 <none>

Next we make a change to the deployment so that we can watch a rollout while it happens. In the deployment YAML file that we created earlier, change the number of replicas:

replicas: 6

So that we can watch the rollout status from the start, we first delete the previous deployment with this command.

➜ kubectl delete deployment myapp-deployment

deployment.apps "myapp-deployment" deleted

Then we recreate it with this command.

➜ kubectl create -f code/deployments/deployment-definition.yaml

deployment.apps/myapp-deployment created

As soon as it has been created, run the command below.

➜ kubectl rollout status deployment/myapp-deployment

Waiting for deployment "myapp-deployment" rollout to finish: 0 of 6 updated replicas are available...

Waiting for deployment "myapp-deployment" rollout to finish: 1 of 6 updated replicas are available...

Waiting for deployment "myapp-deployment" rollout to finish: 2 of 6 updated replicas are available...

Waiting for deployment "myapp-deployment" rollout to finish: 3 of 6 updated replicas are available...

Waiting for deployment "myapp-deployment" rollout to finish: 4 of 6 updated replicas are available...

Waiting for deployment "myapp-deployment" rollout to finish: 5 of 6 updated replicas are available...

deployment "myapp-deployment" successfully rolled out
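Each "Waiting for deployment" line reports how many of the desired replicas are available so far. If we wanted to follow that progress from a script, the numbers could be pulled out with a regular expression. The sketch below is in Python; `rollout_progress` is a hypothetical helper that assumes the message wording shown above.

```python
import re

# Matches the "N of M updated replicas are available" part of a status line.
PROGRESS = re.compile(r"(\d+) of (\d+) updated replicas are available")


def rollout_progress(line):
    """Return (available, desired) from a waiting line, or None for other lines."""
    match = PROGRESS.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


line = 'Waiting for deployment "myapp-deployment" rollout to finish: 3 of 6 updated replicas are available...'
print(rollout_progress(line))
```

The final "successfully rolled out" line has no counters, so the helper returns None for it.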

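We can see the PODs of the deployment becoming available one by one. If we want to save the command that produced each revision, we can add --record when we create the deployment, as below. If the deployment from the previous step still exists, delete it first, because kubectl create fails for a resource that already exists.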

➜ kubectl create -f code/deployments/deployment-definition.yaml --record

Flag --record has been deprecated, --record will be removed in the future

deployment.apps/myapp-deployment created

As the warning says, the --record flag has been deprecated by the Kubernetes team and will be removed in a future release, but for now we can still use it.
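Because --record is deprecated, one alternative is to set the kubernetes.io/change-cause annotation ourselves with kubectl annotate; this is the annotation that rollout history reads for the CHANGE-CAUSE column. The Python sketch below only builds and prints such a command, it does not run it. The helper name `change_cause_command` and the example message are hypothetical.

```python
import shlex


def change_cause_command(deployment, cause):
    """Build a kubectl command that sets the change-cause annotation by hand."""
    return [
        "kubectl",
        "annotate",
        f"deployment/{deployment}",
        f"kubernetes.io/change-cause={cause}",
        "--overwrite",  # replace the annotation if it already exists
    ]


cmd = change_cause_command("myapp-deployment", "upgrade nginx to 1.18")
print(shlex.join(cmd))
```

The printed line can be pasted into a terminal, or the list can be handed to subprocess.run on a machine where kubectl is configured.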

Then we look at the saved command history with the command below.

➜ kubectl rollout history deployment/myapp-deployment

deployment.apps/myapp-deployment

REVISION CHANGE-CAUSE

1 kubectl create --filename=code/deployments/deployment-definition.yaml --record=true

Next we change the deployment by changing the image version of its container. For example, to upgrade to nginx version 1.18, we edit the live deployment with this command.

➜ kubectl edit deployment myapp-deployment --record

Flag --record has been deprecated, --record will be removed in the future

deployment.apps/myapp-deployment edited

Then find the image field under containers and change it to look like this:

- image: nginx:1.18

The result can be seen in the description, as below.

➜ kubectl describe deployment myapp-deployment

Name: myapp-deployment

Namespace: default

CreationTimestamp: Mon, 11 Mar 2024 14:50:06 +0700

Labels: app=myapp

type=front-end

Annotations: deployment.kubernetes.io/revision: 2

kubernetes.io/change-cause: kubectl edit deployment myapp-deployment --record=true

Selector: type=front-end

Replicas: 6 desired | 6 updated | 6 total | 6 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=myapp

type=front-end

Containers:

nginx-container:

Image: nginx:1.18

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: myapp-deployment-84ccc5558 (0/0 replicas created)

NewReplicaSet: myapp-deployment-7cd6f9c5d4 (6/6 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 7m58s deployment-controller Scaled up replica set myapp-deployment-84ccc5558 to 6

Normal ScalingReplicaSet 70s deployment-controller Scaled up replica set myapp-deployment-7cd6f9c5d4 to 2

Normal ScalingReplicaSet 70s deployment-controller Scaled down replica set myapp-deployment-84ccc5558 to 5 from 6

Normal ScalingReplicaSet 70s deployment-controller Scaled up replica set myapp-deployment-7cd6f9c5d4 to 3 from 2

Normal ScalingReplicaSet 45s deployment-controller Scaled down replica set myapp-deployment-84ccc5558 to 4 from 5

Normal ScalingReplicaSet 45s deployment-controller Scaled up replica set myapp-deployment-7cd6f9c5d4 to 4 from 3

Normal ScalingReplicaSet 43s deployment-controller Scaled down replica set myapp-deployment-84ccc5558 to 3 from 4

Normal ScalingReplicaSet 43s deployment-controller Scaled up replica set myapp-deployment-7cd6f9c5d4 to 5 from 4

Normal ScalingReplicaSet 40s deployment-controller Scaled down replica set myapp-deployment-84ccc5558 to 2 from 3

Normal ScalingReplicaSet 36s (x3 over 40s) deployment-controller (combined from similar events): Scaled down replica set myapp-deployment-84ccc5558 to 0 from 1
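The Events list shows the two ReplicaSets trading PODs a few at a time. A toy simulation in Python can reproduce that order. It is a simplified model, not the real deployment controller: it assumes that exactly one new POD becomes ready per step and that old PODs are always ready. The values 2 and 1 are the 25% max surge and 25% max unavailable of 6 replicas, rounded up and down respectively.

```python
def simulate_rolling_update(replicas, max_surge, max_unavailable):
    """Toy model of a rolling update; returns the list of scaling events."""
    max_total = replicas + max_surge            # upper limit on old + new PODs
    min_available = replicas - max_unavailable  # lower limit on ready PODs
    old, new, new_ready = replicas, 0, 0
    events = []
    while old > 0 or new_ready < replicas:
        before = (old, new, new_ready)
        changed = True
        while changed:
            changed = False
            # Scale the new ReplicaSet up as far as the surge limit allows.
            target_new = min(replicas, max_total - old)
            if target_new > new:
                new = target_new
                events.append(("new", new))
                changed = True
            # Scale the old ReplicaSet down while enough PODs stay ready.
            removable = old + new_ready - min_available
            if old > 0 and removable > 0:
                old = max(0, old - removable)
                events.append(("old", old))
                changed = True
        # Assumption: exactly one new POD becomes ready per step.
        if new_ready < new:
            new_ready += 1
        if (old, new, new_ready) == before:
            break  # no progress is possible with these limits
    return events


for replicaset, size in simulate_rolling_update(6, max_surge=2, max_unavailable=1):
    print(f"scaled {replicaset} ReplicaSet to {size}")
```

With these inputs the model scales the new ReplicaSet to 2, the old one to 5, the new one to 3, and so on, which is the same sequence as the events above.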

In the Annotations field we can see that the revision is now deployment.kubernetes.io/revision: 2, because we updated the container image to nginx:1.18.

The information on containers has also changed to look like this

Containers:

nginx-container:

Image: nginx:1.18

Now suppose we update the container image to a version that is not available, or the new version of our service has an error. We simulate this by updating the image with the command below.

➜ kubectl set image deployment myapp-deployment nginx-container=nginx:1.18-does-not-exist --record

Flag --record has been deprecated, --record will be removed in the future

deployment.apps/myapp-deployment image updated
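The argument of set image has the form container-name=image. The left side is the name of the container in the POD template (nginx-container), not the name of the image. The Python sketch below models that lookup on a plain list of containers. `set_image` is a hypothetical helper, and the KeyError only stands in for the failure; we assume the real command also refuses a container name it cannot find.

```python
def set_image(containers, container_name, image):
    """Return a copy of the container list with one container's image replaced."""
    if not any(c["name"] == container_name for c in containers):
        raise KeyError(f"no container named {container_name!r}")
    return [
        {**c, "image": image} if c["name"] == container_name else dict(c)
        for c in containers
    ]


containers = [{"name": "nginx-container", "image": "nginx:1.18"}]
updated = set_image(containers, "nginx-container", "nginx:1.18-does-not-exist")
print(updated[0]["image"])
```

Note that nothing here checks whether the image exists in a registry. The same is true of the command above: the update is accepted, and the problem only shows up when the PODs try to pull the image.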

Let’s look at history first

➜ kubectl rollout history deployment/myapp-deployment

deployment.apps/myapp-deployment

REVISION CHANGE-CAUSE

1 kubectl create --filename=code/deployments/deployment-definition.yaml --record=true

2 kubectl set image deployment myapp-deployment nginx=nginx:1.18-does-not-exist --record=true

3 kubectl set image deployment myapp-deployment nginx-container=nginx:1.18-does-not-exist --record=true

Now let’s look at the details after upgrading to an image version that doesn’t exist. It looks like this:

➜ kubectl get deployment,pods,replicaset

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/myapp-deployment 5/6 3 5 16m

NAME READY STATUS RESTARTS AGE

pod/myapp-deployment-7cd6f9c5d4-bg67z 1/1 Running 0 9m15s

pod/myapp-deployment-7cd6f9c5d4-bt7qr 1/1 Running 0 9m15s

pod/myapp-deployment-7cd6f9c5d4-fvnrq 1/1 Running 0 8m45s

pod/myapp-deployment-7cd6f9c5d4-lqdwj 1/1 Running 0 9m15s

pod/myapp-deployment-7cd6f9c5d4-rksfz 1/1 Running 0 8m50s

pod/myapp-deployment-86c74f6c5d-fpv4n 0/1 ImagePullBackOff 0 2m1s

pod/myapp-deployment-86c74f6c5d-pz6hk 0/1 ImagePullBackOff 0 2m1s

pod/myapp-deployment-86c74f6c5d-sfbvb 0/1 ErrImagePull 0 2m1s

NAME DESIRED CURRENT READY AGE

replicaset.apps/myapp-deployment-7cd6f9c5d4 5 5 5 9m15s

replicaset.apps/myapp-deployment-84ccc5558 0 0 0 16m

replicaset.apps/myapp-deployment-86c74f6c5d 3 3 0 2m1s

In the deployment row, READY shows 5/6, which means only 5 of the 6 desired PODs are ready. UP-TO-DATE shows 3: three new PODs have been created for the latest version, but they cannot start because the image cannot be pulled. AVAILABLE shows 5: these are the PODs that are still running the previous version. Kubernetes took down only one old POD, which is what the 25% max unavailable setting allows for 6 replicas.
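The numbers 5 and 3 follow from the RollingUpdateStrategy of 25% max unavailable and 25% max surge. Kubernetes rounds max surge up and max unavailable down when it converts the percentages into POD counts. A short Python sketch of that arithmetic follows; `rolling_update_bounds` is a hypothetical helper name.

```python
def rolling_update_bounds(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Turn the percentage settings into absolute POD counts."""
    # maxSurge is rounded up, maxUnavailable is rounded down.
    max_surge = -(-replicas * max_surge_pct // 100)
    max_unavailable = replicas * max_unavailable_pct // 100
    return {
        "max_total": replicas + max_surge,
        "min_available": replicas - max_unavailable,
    }


print(rolling_update_bounds(6))  # {'max_total': 8, 'min_available': 5}
```

For 6 replicas at most 8 PODs may exist at once and at least 5 must stay available. That is the state the failed rollout is stuck in: 5 old PODs running plus 3 new PODs that cannot pull their image.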

If we look in more detail, we can see 3 PODs with the status ImagePullBackOff or ErrImagePull and 5 with the status Running.

When Kubernetes updates a deployment, it creates a new ReplicaSet and scales it up while scaling the old ReplicaSet down to 0. The old ReplicaSet is not deleted; it is kept with 0 replicas so that we can roll back to it. When the new version cannot start, the rollout stalls partway and looks like this.

NAME DESIRED CURRENT READY AGE

replicaset.apps/myapp-deployment-7cd6f9c5d4 5 5 5 9m15s

replicaset.apps/myapp-deployment-84ccc5558 0 0 0 16m

replicaset.apps/myapp-deployment-86c74f6c5d 3 3 0 2m1s
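Each POD name starts with the name of the ReplicaSet that created it, followed by a random suffix, so the PODs can be grouped by ReplicaSet to see which version is healthy. The Python sketch below does this for a few rows copied from the output above; `pods_per_replicaset` is a hypothetical helper that assumes the default `kubectl get pods` columns.

```python
from collections import Counter


def pods_per_replicaset(pod_rows):
    """Count PODs per (ReplicaSet, status) from `kubectl get pods` data rows."""
    counts = Counter()
    for row in pod_rows:
        name, _ready, status = row.split()[:3]
        # Drop the "pod/" prefix, then the random suffix after the last dash.
        replicaset = name.split("/")[-1].rsplit("-", 1)[0]
        counts[(replicaset, status)] += 1
    return counts


rows = [
    "pod/myapp-deployment-7cd6f9c5d4-bg67z 1/1 Running 0 9m15s",
    "pod/myapp-deployment-7cd6f9c5d4-bt7qr 1/1 Running 0 9m15s",
    "pod/myapp-deployment-86c74f6c5d-fpv4n 0/1 ImagePullBackOff 0 2m1s",
    "pod/myapp-deployment-86c74f6c5d-sfbvb 0/1 ErrImagePull 0 2m1s",
]
for (replicaset, status), count in pods_per_replicaset(rows).items():
    print(replicaset, status, count)
```

All failing PODs belong to the newest ReplicaSet (86c74f6c5d), while the previous one (7cd6f9c5d4) keeps serving.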

So how can we return to the previous version? By rolling back with this command.

➜ kubectl rollout undo deployment/myapp-deployment

deployment.apps/myapp-deployment rolled back

The rollback was successful. To confirm that the previous state is back, we run this command again.

➜ kubectl get deployment,pods,replicaset

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/myapp-deployment 6/6 6 6 23m

NAME READY STATUS RESTARTS AGE

pod/myapp-deployment-7cd6f9c5d4-bg67z 1/1 Running 0 17m

pod/myapp-deployment-7cd6f9c5d4-bt7qr 1/1 Running 0 17m

pod/myapp-deployment-7cd6f9c5d4-fvnrq 1/1 Running 0 16m

pod/myapp-deployment-7cd6f9c5d4-lqdwj 1/1 Running 0 17m

pod/myapp-deployment-7cd6f9c5d4-rksfz 1/1 Running 0 16m

pod/myapp-deployment-7cd6f9c5d4-rqcdv 1/1 Running 0 23s

NAME DESIRED CURRENT READY AGE

replicaset.apps/myapp-deployment-7cd6f9c5d4 6 6 6 17m

replicaset.apps/myapp-deployment-84ccc5558 0 0 0 23m

replicaset.apps/myapp-deployment-86c74f6c5d 0 0 0 9m57s

So the deployment has returned to the previous working version, nginx:1.18.
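One detail that is easy to miss: a rollback does not move the revision counter backwards. The restored template is recorded as a new, higher revision, and its old revision number disappears from the history. The Python sketch below is a toy model of that bookkeeping. `rollout_undo` is a hypothetical function, and the optional `to_revision` argument mirrors the --to-revision flag of kubectl rollout undo.

```python
def rollout_undo(history, to_revision=None):
    """Toy model of `kubectl rollout undo`: history maps revision -> image."""
    revisions = sorted(history)
    if len(revisions) < 2:
        raise ValueError("no earlier revision to roll back to")
    # Default target is the revision just before the current one.
    target = revisions[-2] if to_revision is None else to_revision
    if target not in history:
        raise ValueError(f"revision {target} not found")
    new_history = dict(history)
    # The restored template gets a new, higher revision number.
    new_history[revisions[-1] + 1] = new_history.pop(target)
    return new_history


history = {1: "nginx", 2: "nginx:1.18", 3: "nginx:1.18-does-not-exist"}
print(rollout_undo(history))
```

In this model the history after the rollback holds revisions 1, 3 and 4, where revision 4 carries the nginx:1.18 image that used to be revision 2.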
